Latency

Understanding and reducing latency in live streaming

What is Latency

Latency is the delay introduced when data is processed and transmitted from one point to another.

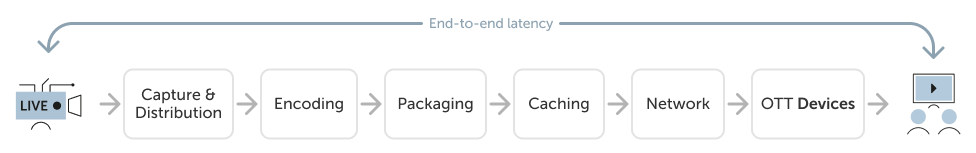

End-to-end latency in live streaming refers to the time it takes for a live video signal to be captured by a camera, transmitted over a network, and displayed on a viewer's screen.

The term “glass to glass” describes this same path, from the camera lens to the moment the picture appears on a screen. End-to-end latency therefore includes the time it takes to encode the video signal, transmit it over the network, and decode it on the viewer's device.

End-to-end latency in a live video workflow

Latency is introduced throughout the entire broadcast chain. The amount added at each stage depends on many factors, including the technologies used, how they are implemented, and how the production and transmission equipment is configured. Reducing latency is desirable for live events because it minimizes the delay between the event itself and what the viewer sees.

Latency introduced in video streaming

Before the introduction of low-latency formats and optimized software architectures, a typical end-to-end OTT streaming platform introduced more than 40 seconds of delay between the source and the output of the player.

With the latest improvements in MK.IO, the delay from encoding to playback has been reduced to 25 seconds.

Additional enhancements are on the way that use standardized low-latency streaming to reduce latency by a factor of three.
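As a rough illustration of these figures, the arithmetic can be sketched as below. The sketch assumes that the factor of three applies to the 25-second encoding-to-playback delay, which the text does not state explicitly. The variable names are hypothetical, and the projected value is an estimate, not a published target.

```python
# Figures quoted in the text above.
current_delay_s = 25.0          # encoding-to-playback delay today
planned_reduction_factor = 3.0  # "a factor of three"

# Assumption: the reduction factor applies to the 25-second figure.
projected_delay_s = current_delay_s / planned_reduction_factor

print(f"Projected delay: about {projected_delay_s:.1f} s")
```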

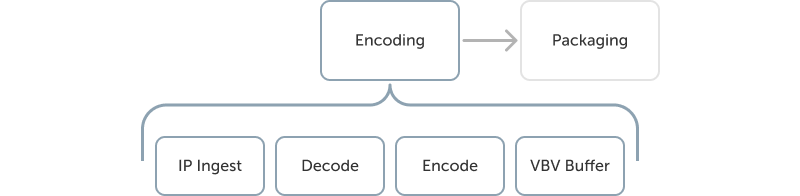

Delay in the encoder

The delay in the live encoder is the sum of the delays introduced by its decoding, encoding, and output stages.
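The sum can be sketched as a simple latency budget. The per-stage values below are placeholders chosen for illustration, not measurements from any real encoder, and the names `encoder_stage_delays_s` and `total_delay_s` are hypothetical.

```python
# Placeholder per-stage delays in seconds (illustrative, not measured).
encoder_stage_delays_s = {
    "decode": 0.5,
    "encode": 2.0,
    "output": 1.0,
}


def total_delay_s(stage_delays):
    """Return the total delay as the sum of all per-stage delays."""
    return sum(stage_delays.values())


encoder_delay_s = total_delay_s(encoder_stage_delays_s)
print(f"Encoder delay: {encoder_delay_s:.1f} s")
```

Keeping the stages separate in the budget makes it easier to see which one dominates before tuning any of them.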

Latency overview in the encoder

At the input of the live encoder, an IP input buffer compensates for network jitter and out-of-order packets. Then the MPEG-2 TS input is decoded. The decode delay will vary depending on the format.
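The reordering role of an input buffer can be sketched as follows. This is a minimal illustration under stated assumptions, not the encoder's actual implementation: the `ReorderBuffer` class, its `depth` parameter, and the packet sequence numbers are hypothetical, and both sequence-number wraparound and time-based jitter handling are ignored.

```python
import heapq


class ReorderBuffer:
    """Hold a few packets so they can be released in sequence order."""

    def __init__(self, depth):
        self.depth = depth  # number of packets held back before release
        self._heap = []     # min-heap of (sequence_number, payload)

    def push(self, seq, payload):
        """Add a packet and return any packets that are now released."""
        heapq.heappush(self._heap, (seq, payload))
        released = []
        # Once more than `depth` packets are held, release the lowest.
        while len(self._heap) > self.depth:
            released.append(heapq.heappop(self._heap))
        return released

    def flush(self):
        """Release everything still held, lowest sequence number first."""
        released = []
        while self._heap:
            released.append(heapq.heappop(self._heap))
        return released


buffer = ReorderBuffer(depth=2)
ordered = []
for seq in [1, 3, 2, 5, 4, 6]:  # packets arriving out of order
    ordered.extend(s for s, _ in buffer.push(seq, b"payload"))
ordered.extend(s for s, _ in buffer.flush())
print(ordered)  # [1, 2, 3, 4, 5, 6]
```

A deeper buffer tolerates packets that arrive further out of order, but every packet waits longer before it is released, which is why this buffer contributes to the encoder delay.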

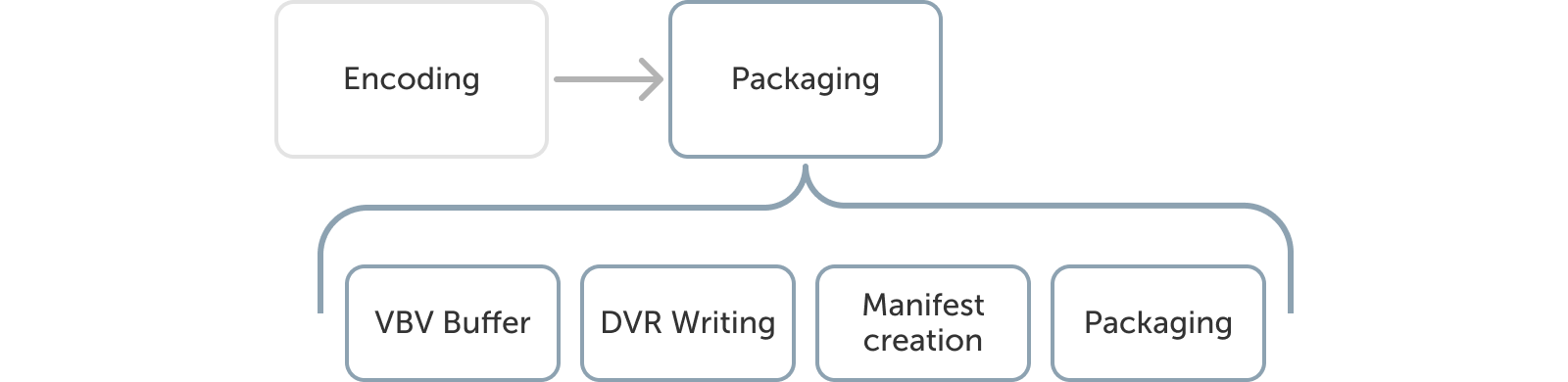

Delay in the packager

Latency overview in the packager

Content is first written to the DVR before it is packaged and played out. The Just-In-Time Packager (JITP) then issues CMAF chunks as soon as they are complete. The packaged output cannot be requested or delivered until the manifest has been generated and delivered to the player.

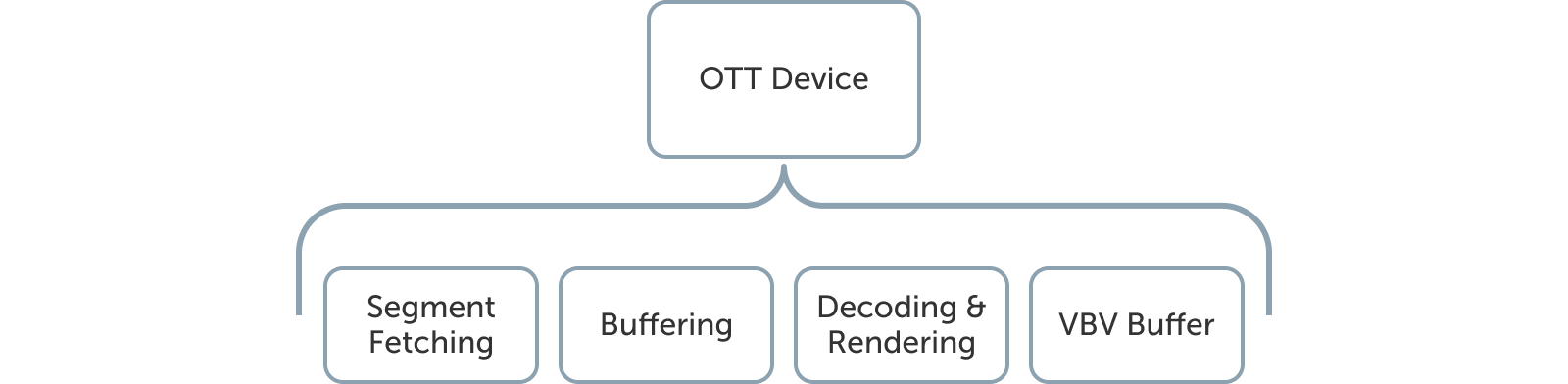

Delay introduced at player level

Latency overview in the player

The player first fetches video segments over the network; each segment is several seconds long. To ensure a smooth user experience, it then waits until it has buffered multiple segments before beginning playback. This buffer helps prevent interruptions caused by network fluctuations. Finally, the player decodes and renders the video frames and audio samples on the user's device.
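The effect of segment length and buffer depth on player delay can be estimated with a short calculation. The segment durations and the buffer depth of three segments below are assumed values for illustration only, and the function name `player_buffer_delay_s` is hypothetical. The estimate covers buffering alone and ignores fetch, decode, and render time.

```python
def player_buffer_delay_s(segment_duration_s, segments_buffered):
    """Estimate the delay added by buffering whole segments before playback."""
    return segment_duration_s * segments_buffered


# Assumed values: compare long and short segments at the same buffer depth.
for segment_duration_s in (6.0, 2.0):
    delay_s = player_buffer_delay_s(segment_duration_s, segments_buffered=3)
    print(f"{segment_duration_s:.0f} s segments x 3 buffered = {delay_s:.0f} s")
```

Under these assumptions, shortening the segments or buffering fewer of them lowers the delay, at the cost of less protection against network fluctuations.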
